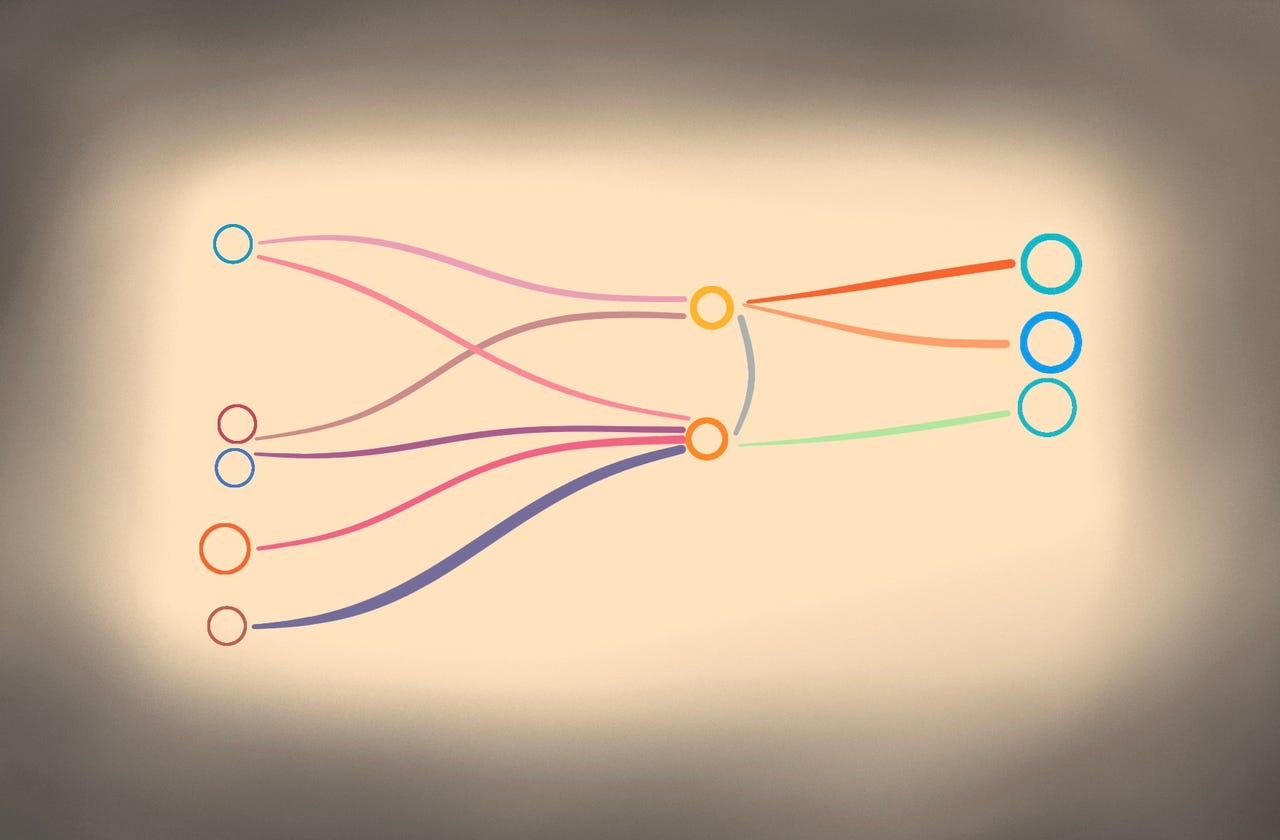

A neural network transforms input (the circles on the left) into output (on the right). That happens through a transformation of weights (center), which we often mistake for patterns in the data itself.

Tiernan Ray

It's a commonplace of artificial intelligence to say that machine learning, which depends on vast amounts of data, functions by finding patterns in data.

The phrase "finding patterns in data" has, in fact, been a staple of fields such as data mining and knowledge discovery for years now, and it has been assumed that machine learning, and its deep learning variant especially, simply continues the tradition of finding such patterns.

AI programs do, indeed, result in patterns, but, just as "The fault, dear Brutus, is not in our stars, but in ourselves," the fact of those patterns is not something in the data; it is what the AI program makes of the data.

Almost all machine learning models function via a learning rule that changes the so-called weights, also known as parameters, of the program as the program is fed examples of data, and, possibly, labels attached to that data. It is the value of the weights that counts as "knowing" or "understanding."

The pattern that is being found is really a pattern of how weights change. The weights simulate how real neurons are believed to "fire," following the principle formulated by psychologist Donald O. Hebb, which became known as Hebbian learning: the idea that "neurons that fire together, wire together."
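To make "a pattern of how weights change" concrete, here is a minimal sketch of a Hebbian-style update. The function name `hebbian_update`, the learning rate, and the toy activations are all assumptions chosen for illustration; modern deep learning programs typically use gradient-based rules rather than this one.

```python
def hebbian_update(weights, inputs, outputs, learning_rate=0.1):
    """One Hebbian step: each weight grows by learning_rate * output * input.

    weights[i][j] connects input unit j to output unit i.
    (Hypothetical helper, written for illustration only.)
    """
    return [
        [w + learning_rate * y * x for w, x in zip(row, inputs)]
        for row, y in zip(weights, outputs)
    ]


# Two output units, three input units; every connection starts at zero.
weights = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]

# Input units 0 and 2 are active at the same time as output unit 0.
inputs = [1.0, 0.0, 1.0]
outputs = [1.0, 0.0]

# Feed the same example five times.
for _ in range(5):
    weights = hebbian_update(weights, inputs, outputs)

print(weights)
```

Only the connections between units that were active together have grown; the rest stay at zero. Nothing in the input list changed. What changed is the table of weights.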

It is the pattern of weight changes that is the model for learning and understanding in machine learning, something the founders of deep learning emphasized. As expressed almost forty years ago, in one of the foundational texts of deep learning, Parallel Distributed Processing, Volume I, James McClelland, David Rumelhart, and Geoffrey Hinton wrote,

"What is stored is the connection strengths between units that allow these patterns to be created [...] If the knowledge is the strengths of the connections, learning must be a matter of finding the right connection strengths so that the right patterns of activation will be produced under the right circumstances."

McClelland, Rumelhart, and Hinton were writing for a select audience, cognitive psychologists and computer scientists, and they were writing in a very different age, an age when people didn't make easy assumptions that anything a computer did represented "knowledge." They were laboring at a time when AI programs couldn't do much at all, and they were mainly concerned with how to produce a computation - any computation - from a fairly limited arrangement of transistors.

Then, starting with the rise of powerful GPU chips some sixteen years ago, computers really did begin to produce interesting behavior, capped off by the landmark ImageNet performance of Hinton's work with his graduate students in 2012 that marked deep learning's coming of age.

As a consequence of the new computer achievements, the popular mind started to build all kinds of mythology around AI and deep learning. There was a rush of really bad headlines likening the technology to super-human performance.

Today's conception of AI has obscured what McClelland, Rumelhart, and Hinton focused on, namely, the machine, and how it "creates" patterns, as they put it. They were very intimately familiar with the mechanics of weights constructing a pattern as a response to what was, in the input, merely data.

Why does all that matter? If the machine is the creator of patterns, then the conclusions people draw about AI are probably mostly wrong. Most people assume a computer program is perceiving a pattern in the world, which can lead to people deferring judgment to the machine. If it produces results, the thinking goes, the computer must be seeing something humans don't.

Except that a machine that constructs patterns isn't explicitly seeing anything. It's constructing a pattern. That means what is "seen" or "known" is not the same as the colloquial, everyday sense in which humans speak of themselves as knowing things.

Instead of starting from the anthropocentric question, "What does the machine know?", it's best to start from a more precise question: "What is this program representing in the connections of its weights?"

Depending on the task, the answer to that question takes many forms.

Consider computer vision. The convolutional neural network that underlies machine learning programs for image recognition and other visual perception is composed of a collection of weights that measure pixel values in a digital image.

The pixel grid is already an imposition of a 2-D coordinate system on the real world. Provided with the machine-friendly abstraction of the coordinate grid, a neural net's task of representation boils down to matching the strength of collections of pixels to a label that has been imposed, such as "bird" or "blue jay."

In a scene containing a bird, or specifically a blue jay, many things may be happening, including clouds, sunshine, and passersby. But the scene in its entirety is not the thing. What matters to the program is the collection of pixels most likely to produce an appropriate label. The pattern, in other words, is a reductive act of focus and selection inherent in the activation of neural net connections.

You might say, a program of this kind doesn't "see" or "perceive" so much as it filters.
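The filtering idea can be sketched in a few lines. The example below slides a small grid of weights over a toy pixel grid, which is the basic operation inside a convolutional layer (strictly speaking a cross-correlation, with no padding). The image, the hand-picked kernel, and the function name `convolve2d` are assumptions for illustration; in a trained network the kernel values are learned, not chosen by hand.

```python
def convolve2d(image, kernel):
    """Slide a grid of weights over the image and sum the weighted pixels.

    Covers only positions where the kernel fits entirely inside the image.
    (Hypothetical helper, written for illustration only.)
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 4x4 "image": dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Weights that respond to a dark-to-bright vertical edge.
kernel = [
    [-1, 1],
    [-1, 1],
]

response = convolve2d(image, kernel)
for row in response:
    print(row)
```

The response is non-zero only in the middle column, where the edge sits. Everything else in the image is discarded, which is the sense in which the weights filter rather than see.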

The same is true in games, where AI has mastered chess and poker. In the "full-information" game of chess, mastered by DeepMind's AlphaZero program, the machine learning task boils down to crafting a probability score at each moment of how much a potential next move will lead ultimately to a win, a loss, or a draw.

Because the number of potential future game board configurations cannot be calculated even by the fastest computers, the computer's weights cut short the search for moves by doing what you might call summarizing. The program summarizes the likelihood of a success if one were to pursue several moves in a given direction, and then compares that summary to the summary of potential moves to be taken in another direction.
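As a rough illustration of summarizing and comparing, the sketch below scores each candidate move in a toy game by the fraction of random playouts that end in a win. This is not AlphaZero's method, which pairs a trained neural network with tree search; the game (a pile of stones, take one to three, whoever takes the last stone wins), the function names, and the playout count are all assumptions.

```python
import random


def random_playout(stones, rng):
    """Play random moves until the pile is empty.

    Returns True if the player who moves first in this playout wins.
    """
    first_player_to_move = True
    while True:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return first_player_to_move  # taking the last stone wins
        first_player_to_move = not first_player_to_move


def summarize_moves(stones, playouts=2000, seed=0):
    """Estimate, for each legal move, the chance that the mover goes on to win."""
    rng = random.Random(seed)
    scores = {}
    for take in range(1, min(3, stones) + 1):
        remaining = stones - take
        if remaining == 0:
            scores[take] = 1.0  # this move takes the last stone
            continue
        # The opponent moves next, so we win whenever they lose the playout.
        wins = sum(not random_playout(remaining, rng) for _ in range(playouts))
        scores[take] = wins / playouts
    return scores


scores = summarize_moves(5)
best_move = max(scores, key=scores.get)
print(scores, best_move)
```

Each move is reduced to a single number, and the program picks the largest. The board position itself never has to "mean" anything for that comparison to work.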

Whereas the state of the board at any moment - the position of pieces, and which pieces remain - might "mean" something to a human chess grandmaster, it's not clear the term "mean" has any meaning for DeepMind's AlphaZero for such a summarizing task.

A similar summarizing task is achieved for the Pluribus program that in 2019 conquered the hardest form of poker, No-limit Texas hold'em. That game is even more complex in that it has hidden information, the players' face down cards, and additional "stochastic" elements of bluffing. But the representation is, again, a summary of likelihoods by each turn.

Even in programs that handle human language, what's in the weights is different from what the casual observer might suppose. GPT-3, the top language program from OpenAI, can produce strikingly human-like output in sentences and paragraphs.

Does the program "know" language? Its weights hold a representation of the likelihood of how individual words and even whole strings of text are found in sequence with other words and strings.
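The simplest possible version of "the likelihood of how individual words are found in sequence with other words" is a table of which word follows which. The sketch below is only a stand-in: GPT-3 is a large Transformer network operating on tokens, not a lookup table of word pairs. The function name `train_bigram` and the tiny corpus are assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word follows another, then turn counts into probabilities.

    (Hypothetical helper, written for illustration only.)
    """
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return {
        prev: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for prev, followers in counts.items()
    }


corpus = "the cat sat on the mat the cat ate"
model = train_bigram(corpus)

# Probabilities of each word that followed "the" in the corpus.
print(model["the"])
```

In this corpus "the" is followed by "cat" twice and "mat" once, so the table assigns "cat" two thirds of the probability. The table records co-occurrence and nothing else.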

You could call that function of a neural net a summary similar to AlphaZero or Pluribus, given that the problem is rather like chess or poker. But the possible states to be represented as connections in the neural net are not just vast; they are infinite, given the infinite composability of language.

On the other hand, given that the output of a language program such as GPT-3, a sentence, is a fuzzy answer rather than a discrete score, the "right answer" is somewhat less demanding than the win, lose or draw of chess or poker. You could also call this function of GPT-3 and similar programs an "indexing" or an "inventory" of things in their weights.

Do humans have a similar kind of inventory or index of language? There doesn't seem to be any indication of it so far in neuroscience. Likewise, in the expression "to tell the dancer from the dance," does GPT-3 spot the multiple levels of significance in the phrase, or the associations? It's not clear such a question even has a meaning in the context of a computer program.

In each of these cases - chess board, cards, word strings - the data are what they are: a fashioned substrate divided in various ways, a set of plastic rectangular paper products, a clustering of sounds or shapes. Whether such inventions "mean" anything, collectively, to the computer, is only a way of saying that a computer becomes tuned in response, for a purpose.

The things such data prompt in the machine - filters, summarizations, indices, inventories, or however you want to characterize those representations - are never the thing in itself. They are inventions.

But, you may say, people see snowflakes and see their differences, and also catalog those differences, if they have a mind to. True, human activity has always sought to find patterns, via various means. Direct observation is one of the simplest means, and in a sense, what is being done in a neural network is a kind of extension of that.

You could say the neural network reveals what has been true of human activity for millennia: a pattern is a thing imposed on the world rather than a thing in the world. In the world, snowflakes have form, but that form is only a pattern to a person who collects, indexes, and categorizes them. It is a construction, in other words.

The activity of creating patterns will increase dramatically as more and more programs are unleashed on the data of the world, and their weights are tuned to form connections that we hope create useful representations. Such representations may be incredibly useful. They may someday cure cancer. It is useful to remember, however, that the patterns they reveal are not out there in the world; they are in the eye of the perceiver.
