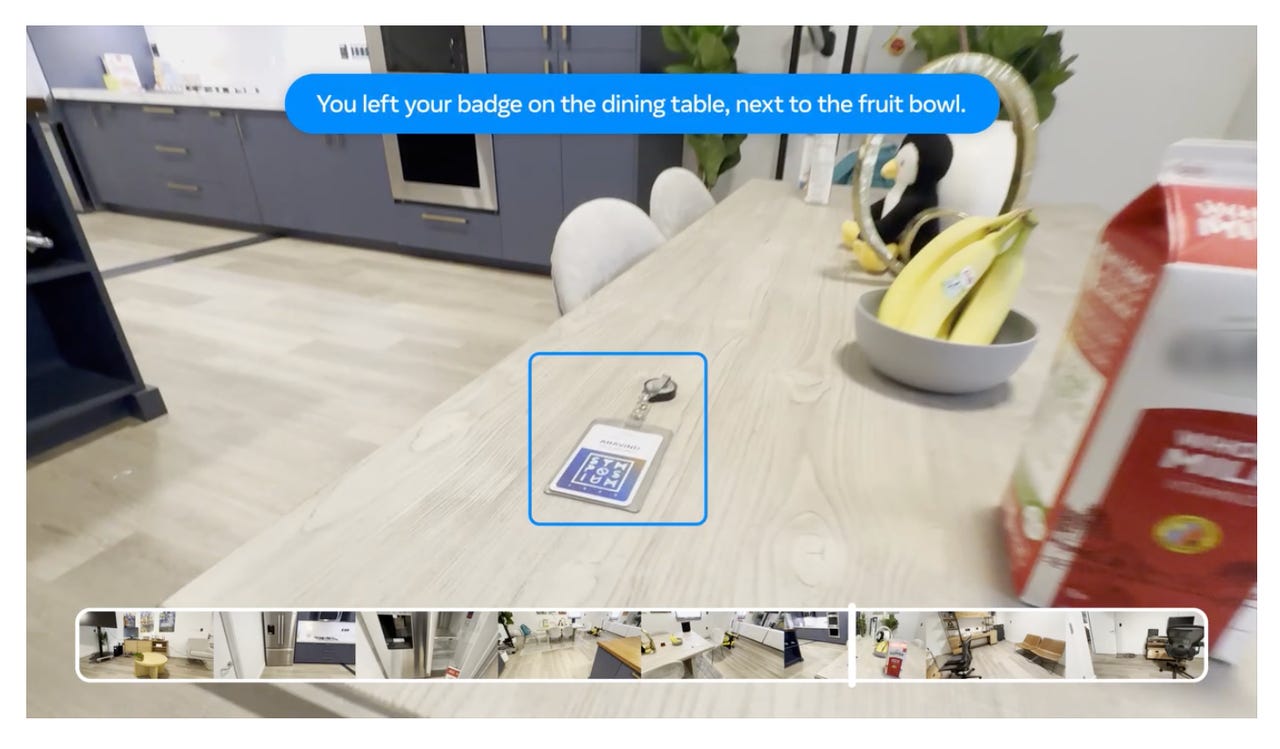

An example of how Meta's OpenEQA could create embodied intelligence in the home

Meta wants to help AI understand the world around it, and get smarter in the process. The company on Thursday unveiled Open-Vocabulary Embodied Question Answering (OpenEQA) to showcase how AI could understand the spaces around it. The open-source framework is designed to give AI agents sensory inputs that allow them to gather clues from their environment, "see" the space they're in, and otherwise provide value to humans who ask them for help in abstract terms.

"Imagine an embodied AI agent that acts as the brain of a home robot or a stylish pair of smart glasses," Meta explained. "Such an agent needs to leverage sensory modalities like vision to understand its surroundings and be capable of communicating in clear, everyday language to effectively assist people."

Meta provided a host of examples of how OpenEQA could work in the wild, including asking AI agents where users placed an item they need or if they still have food left in the pantry.

"Let's say you're getting ready to leave the house and can't find your office badge. You could ask your smart glasses where you left it, and the agent might respond that the badge is on the dining table by leveraging its episodic memory," Meta wrote. "Or if you were hungry on the way back home, you could ask your home robot if there's any fruit left. Based on its active exploration of the environment, it might respond that there are ripe bananas in the fruit basket."

It sounds like we're well on our way to an at-home robot or pair of smart glasses that could help run our lives. There's still a significant challenge in developing such technology, however: Meta found that today's vision+language models (VLMs) perform poorly on these tasks. "In fact, for questions that require spatial understanding, today's VLMs are nearly 'blind': access to visual content provides no significant improvement over language-only models," Meta said.

This is precisely why Meta made OpenEQA open source. The company says it is extremely difficult to build an AI model that can truly "see" the world around it as humans do, recollect where things are placed and when, and then provide contextual value to a human based on abstract queries. The company believes a community of researchers, technologists, and experts will need to work together to make it a reality.

Meta says that OpenEQA has more than 1,600 "non-templated" question-and-answer pairs that represent how a human might interact with AI. Although the company has validated the pairs to ensure they can be answered correctly by the algorithm, more work needs to be done.

"As an example, for the question 'I'm sitting on the living room couch watching TV. Which room is directly behind me?', the models guess different rooms essentially at random without significantly benefitting from visual episodic memory that should provide an understanding of the space," Meta wrote. "This suggests that additional improvement on both perception and reasoning fronts is needed before embodied AI agents powered by such models are ready for primetime."

So, it's still early days. If OpenEQA shows anything, however, it's that companies are working really hard to get us AI agents that can reshape how we live.
