Edge computing has become the IT industry's hot "new" term. Media outlets, vendors (including Cisco!), and analysts alike are all touting the value of edge computing, particularly for Internet of Things (IoT) implementations. While most agree that there are benefits to processing compute functions "at the edge," agreeing on what exactly constitutes the edge is another thing altogether.

Since publishing my introduction to edge computing blog post and giving a presentation on the topic at Cisco Live Barcelona, I've encountered various viewpoints. So I thought it would be helpful to establish exactly where the edge is, at least from Cisco's point of view.

In 2015, Dr. Karim Arabi, vice president, engineering at Qualcomm Inc., defined edge computing as, "All computing outside cloud happening at the edge of the network."

Dr. Arabi's definition is commonly agreed upon. However, at Cisco, the leader in networking, we see the edge a little differently. Our viewpoint is that the edge is anywhere that data is processed before it crosses the Wide Area Network (WAN). Before you start shaking your head in protest, hear me out.

The argument for edge computing goes something like this: by handling heavy compute processes at the edge rather than in the cloud, you reduce latency and can analyze and act on time-sensitive data in real time, or very close to it. This one benefit, reduced latency, is huge.

Reducing latency opens up a host of new IoT use cases, most notably autonomous vehicles. If an autonomous vehicle needs to brake to avoid hitting a pedestrian, the data must be processed at the edge. By the time the data gets to the cloud and instructions are sent back to the car, the pedestrian could be dead.
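To put rough numbers on the braking example, the sketch below computes how far a vehicle travels while it waits for a decision. The speed (50 km/h) and the 10 ms local versus 100 ms cloud round-trip delays are assumptions chosen for illustration, not measurements from this post.

```python
# Hypothetical figures for illustration only; real latencies vary by network.
SPEED_KMH = 50.0  # assumed vehicle speed


def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled before a braking decision can take effect."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_m_per_s * delay_ms / 1000   # convert ms to s


# Assumed round-trip delays for processing locally versus in the cloud.
for label, delay_ms in [("local (edge)", 10.0), ("remote (cloud)", 100.0)]:
    print(f"{label}: {distance_during_delay(SPEED_KMH, delay_ms):.2f} m")
```

Because distance grows linearly with delay, a tenfold increase in round-trip time means the vehicle covers ten times the distance before the brakes are even applied.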

The other frequently cited benefit of edge computing is a lower bandwidth cost for sending data to the cloud. To be clear, there's plenty of bandwidth available to send data to the cloud; bandwidth itself is not the issue. The issue is the cost of that bandwidth. Those costs accrue when you hit the WAN, regardless of where the data is going. In a typical network, the LAN is a very cheap and reliable link, whereas the WAN is significantly more expensive. Once data hits the WAN, you're accruing higher costs and latency.
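The cost argument can also be put in numbers. The model below assumes a hypothetical per-gigabyte WAN price and treats LAN transfer as free; the `reduction_ratio` parameter (the share of raw data still forwarded after edge processing) and the 2 GB/day workload are illustrative assumptions, not figures from this post.

```python
# Hypothetical prices; real WAN and LAN tariffs vary widely.
WAN_PRICE_PER_GB = 5.00  # assumed cost of cellular/WAN transfer, per GB
LAN_PRICE_PER_GB = 0.00  # assumed: local links add no per-GB charge


def monthly_transfer_cost(raw_gb_per_day: float,
                          reduction_ratio: float,
                          days: int = 30) -> float:
    """Return the monthly transfer cost when data is processed at the edge.

    Raw data travels over the LAN to an edge device; only the fraction
    `reduction_ratio` of it is then forwarded across the WAN.
    """
    if not 0.0 <= reduction_ratio <= 1.0:
        raise ValueError("reduction_ratio must be between 0 and 1")
    lan_gb = raw_gb_per_day * days
    wan_gb = raw_gb_per_day * reduction_ratio * days
    return lan_gb * LAN_PRICE_PER_GB + wan_gb * WAN_PRICE_PER_GB


# Assumed workload: 2 GB of raw sensor data per day.
print(f"send everything raw: {monthly_transfer_cost(2.0, 1.0):.2f}")
print(f"send 5% after edge:  {monthly_transfer_cost(2.0, 0.05):.2f}")
```

Since the WAN term scales linearly with `reduction_ratio`, whatever the edge device filters out never reaches the expensive link, while LAN traffic (assumed free here) leaves the total unchanged.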

If we can agree that reduced latency and reduced cost are key characteristics of edge computing, then sending data over the WAN, even if it's to a private data center in your headquarters, is NOT edge computing. To put it another way, edge computing means that data is processed before it crosses any WAN, and therefore is NOT processed in a traditional data center, whether that is a private or a public cloud data center.

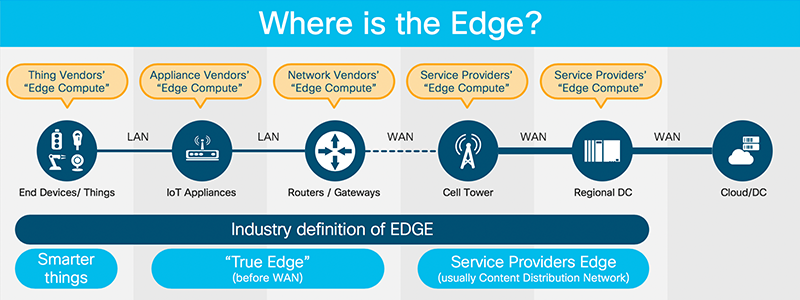

The following picture illustrates the typical devices in an Industrial IoT solution and who claims to have "Edge Compute" in this topology:

As you can see, the edge is relative: the service provider's edge is not the customer's edge. But the most important difference between the edge compute locations depicted is the network connectivity. End devices, IoT appliances, and routers are connected via the LAN, perhaps over Wi-Fi or Gigabit Ethernet cable. That is usually a very reliable and cheap link. The link between the routers/gateways and the cell tower is the most critical. That's the service provider's last mile, the 5G or 4G uplink. It introduces the most latency and is the most expensive for the end customer. Once you're on the cell tower, the provider has fiber and you're safe from a throughput perspective, but you're still looking at increasing costs.
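The "before it crosses any WAN" definition can be expressed as a small model of the path shown in the picture. The hop names, the two-value `link` label, and the `edge_locations` helper are hypothetical simplifications for illustration; a real topology carries far more detail. Following the argument in this post, the end device itself is treated as the data source and is not counted as an edge compute location.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One step on the path from an end device toward the cloud."""
    name: str  # node the data reaches on this hop
    link: str  # type of link used to reach it: "LAN" or "WAN"


# Hypothetical Industrial IoT path, starting after the end device (the data source).
PATH = [
    Hop("IoT appliance", "LAN"),
    Hop("router/gateway", "LAN"),
    Hop("cell tower", "WAN"),  # 4G/5G last-mile uplink
    Hop("provider data center", "WAN"),
    Hop("public cloud", "WAN"),
]


def edge_locations(path: list[Hop]) -> list[str]:
    """Return nodes where processing happens before any WAN link is crossed."""
    locations = []
    for hop in path:
        if hop.link == "WAN":
            break  # this node and everything after it lie beyond the edge
        locations.append(hop.name)
    return locations


print(edge_locations(PATH))  # ['IoT appliance', 'router/gateway']
```

Under this model, the cell tower does not qualify as the customer's edge, because reaching it already means crossing the WAN uplink.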

As you can also infer from the graphic, end devices should be excluded from edge compute, because it can be nearly impossible to draw the line between things, smart things, and edge compute things.

We can take the concept of edge computing a step further and assert that it's not new at all. In fact, the IT industry has been doing edge computing for quite some time. Remember learning about the cyclical swing between centralized and decentralized compute? Edge compute is essentially the latest term for decentralized compute.

The edge can mean very different things to different organizations, depending on the network infrastructure and use case. However, if you think about the edge in terms of the benefits you want to achieve, then it becomes clear very quickly where the edge of your IT environment begins and ends.

In the future, I'll explore some use cases to illustrate how the Cisco definition of edge computing applies in the real world. For now, I encourage you to read An Introduction to edge computing and use case examples. You can also watch the presentation on which this blog is based.

What is Edge Computing?

Hot tags:

computing

Internet of Things (IoT)

Cisco Industrial IoT (IIoT)

data

Edge computing

WAN

LAN

edge

latency