It sometimes feels like everyone everywhere is dabbling in generative artificial intelligence (AI). From developers who are producing code to marketers who are creating content, people in all kinds of roles are finding ways to boost their productivity with emerging technology.

The rush to take advantage of AI means analyst Gartner believes more than 80% of enterprises will be using generative AI application programming interfaces, models, and software in production environments by 2026.

But it's worth remembering that, despite the hype associated with generative AI, many enterprises are not dabbling in the technology -- at least not officially.

Generative AI is still at the exploratory stage for most businesses, with Gartner reporting that less than 5% of enterprises use the technology in production.

Lily Haake, head of technology and digital executive search at recruiter Harvey Nash, says in a video chat that her work with clients suggests big-bang AI projects are off the agenda for now.

"I'm seeing really impressive small pilots, such as legal clients using AI to generate documents to scan caseloads and make people more productive," she says. "It's all very exciting and positive. But it's at a small scale. And that's because my clients don't seem to be exploring generative AI on such a major scale that it's transformative for the entire business."

Rather than using AI to change organizational operations and improve customer services, most digital leaders are experimenting at the edges of the enterprise before thinking about how to bring new generative services into the core.

However, just because the business hasn't mandated the use of generative AI doesn't mean professionals aren't already using the technology -- with or without the say-so of the boss.

Research by technology specialist O'Reilly suggests 44% of IT professionals already use AI in their programming work, and 34% are experimenting with it. Almost a third (32%) of IT professionals are using AI for data analytics, and 38% are experimenting with it.

Mike Loukides, author of the report, says O'Reilly is surprised at the level of adoption.

But while he describes the growth of generative AI as "explosive", Loukides says enterprises could slide into an "AI winter" if they ignore the risks and hazards that come with hurried adoption of the technology.

That's a sentiment that resonates with Avivah Litan, distinguished VP analyst at Gartner, who suggests CIOs and their C-suite peers can't afford to just sit back and wait.

"You need to manage the risks before they manage you," she says in a one-to-one video interview.

Litan says Gartner recently polled more than 700 executives in a webinar about the risks of generative AI and found CIOs are most concerned about data privacy, followed by hallucinations, and then security.

Let's consider each of those areas in turn.

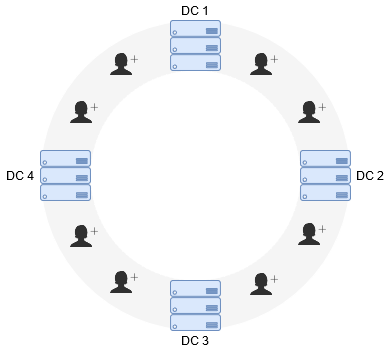

CIOs and other senior managers who implement an enterprise version of generative AI will likely send their data to their vendor's hosted environments.

Litan acknowledges that kind of arrangement is nothing new -- organizations have been sending data to the cloud and software-as-a-service providers for a decade or more.

However, she says, CIOs believe AI involves a different kind of risk, particularly around how vendors store and use information, such as for training their own large language models.

Litan says Gartner has completed a detailed analysis of many of the IT vendors that are offering AI-enabled services.

"The bottom line with data protection is that, if you're using a third-party foundation model, it's all about trust, but you can't verify," she says. "So, you must trust that the vendors have good security practices in place and your data is not going to leak. And we all know mistakes are made in cloud systems. If your confidential data leaks, the vendors won't be liable -- you will."

As well as assessing data protection risks across external processes, organizations need to be aware of how employees use data in generative AI applications and models.

Litan says these risks cover unacceptable uses of data that can compromise decision-making, including careless handling of confidential inputs, inaccurate or hallucinated outputs, and the use of another company's intellectual property.

Add in ethical issues and fears that models can be biased, and business leaders face a confluence of input and output risks.

Litan says executives managing the rollout of generative AI must ensure people across the business take nothing for granted.

"You've got to make sure that you're using data and generative AI in a way that's acceptable to your organization; you're not giving away the keys to your kingdom, and that what's coming back is vetted for inaccuracies and hallucinations," she says.
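One way to picture the input side of that advice is a filter that screens prompts before they leave the business. The following is a minimal sketch, not a method described by Litan or Gartner: the `redact` function, the pattern names, and the `sk-` key format are all hypothetical, and a real deployment would rely on the organization's own data classification rules.

```python
import re

# Hypothetical patterns for illustration only; a real policy would
# define its own list based on how the organization classifies data.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "api_key": re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"),
}


def redact(prompt):
    """Mask sensitive matches and report which pattern labels were found."""
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(prompt):
            found.append(label)
            prompt = pattern.sub(f"[{label.upper()} REDACTED]", prompt)
    return prompt, found


if __name__ == "__main__":
    cleaned, labels = redact("Ask jane.doe@example.com about the renewal.")
    print(cleaned)
    print(labels)
```

A filter like this only covers what goes out. Vetting what comes back for inaccuracies and hallucinations still needs human review or separate checks.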

Businesses deal with a range of cybersecurity risks on a day-to-day basis, such as hackers gaining access to enterprise data due to a system vulnerability or an error by an employee.

However, Litan says AI represents a different threat vector.

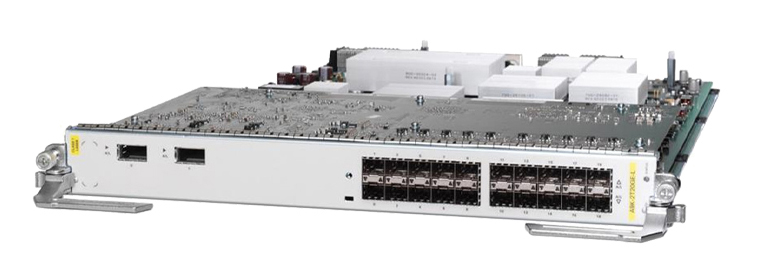

"These are new risks," she says. "There's prompt injection attacks, vector database attacks, and hackers can get access to model states and parameters."

While potential risks include data and money loss, attackers could also choose to manipulate models and swap out good data for bad.

Litan says this new threat vector means businesses can't deal with new risks by simply using old, tried-and-tested measures.

"Attackers can poison the model," she says. "You'll have to put security around the model and it's a different kind of security. Endpoint protection is not going to help you with data model protection."

This combination of risks from generative AI might seem like an intractable challenge for CIOs and other senior executives.

However, Litan says there are new solutions evolving as quickly as the risks and opportunities associated with generative AI are emerging.

"You don't need to sit there and panic," she says.

"There is a new market that's evolving. As you can imagine, when there are problems, there are entrepreneurs who want to make money off those problems."

The good news is that workable solutions are on their way. And while the technology market settles, Litan says business leaders should get prepared.

"The bottom-line advice we give CIOs is: get organized, then define your acceptable use policies. Make sure your data is classified and you have access management," she says.

"Set up a system where users can send in their application requests, you know what data they're using, the right people approve these requests, and you check the process twice a year to make sure it's being enforced correctly. Just take generative AI one step at a time."
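The request-and-approval process Litan describes could be modeled roughly as follows. This is a sketch under stated assumptions rather than a Gartner-endorsed design: the `AIUseRequest` class, the classification labels, and the 182-day review interval (standing in for "twice a year") are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical labels; an acceptable use policy would define its own.
ALLOWED_CLASSIFICATIONS = {"public", "internal"}
# Assumed stand-in for "twice a year".
REVIEW_INTERVAL = timedelta(days=182)


@dataclass
class AIUseRequest:
    requester: str
    application: str
    data_classifications: set
    approved_by: Optional[str] = None
    last_reviewed: Optional[date] = None

    def violations(self):
        """Return the data classes the policy does not permit."""
        return self.data_classifications - ALLOWED_CLASSIFICATIONS

    def approve(self, approver, today):
        """Approve only if someone else signs off and the data is permitted."""
        if approver == self.requester or self.violations():
            return False
        self.approved_by = approver
        self.last_reviewed = today
        return True

    def review_due(self, today):
        """True once an approved request has gone a full interval unchecked."""
        if self.last_reviewed is None:
            return False
        return today - self.last_reviewed >= REVIEW_INTERVAL
```

The point of the sketch is that each request records what data it touches and who approved it, so the periodic check has something concrete to audit.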
