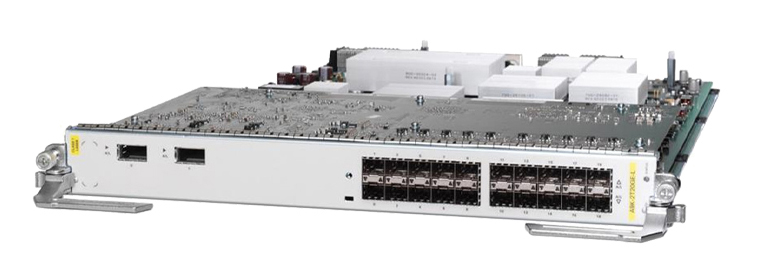

Cerebras Systems, an AI startup, has unveiled a new version of its dinner-plate-sized processor, claiming that the hardware will deliver double the performance of its predecessor at the same price.

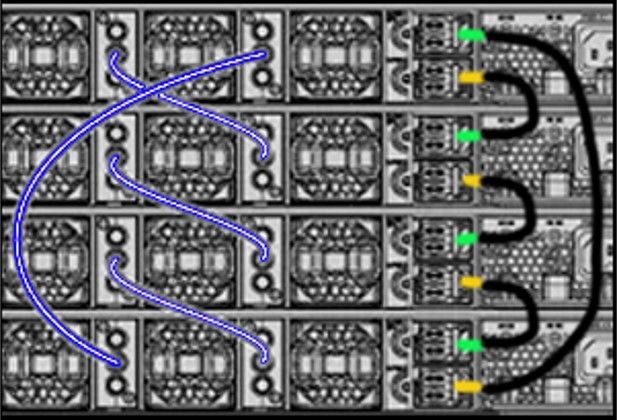

Cerebras Systems, an AI chip startup, has launched a new AI processor, the Wafer Scale Engine 3 (WSE-3), which it bills as the fastest AI chip in the world, with 4 trillion transistors and 900,000 AI-optimized compute cores. The chip is built on TSMC's 5nm manufacturing technology. According to the company, the WSE-3 delivers 125 petaflops of peak AI performance and is designed to train next-generation frontier models of up to 24 trillion parameters.

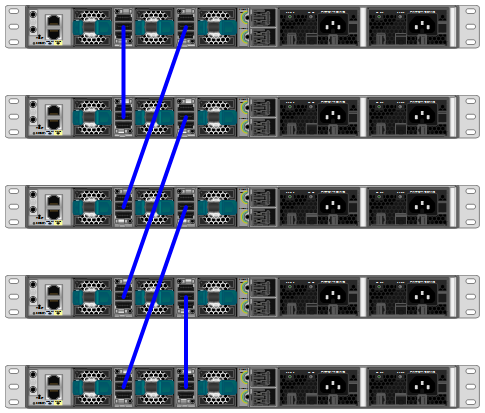

California-based Cerebras' AI chips compete with tech giant Nvidia's most powerful graphics processing units (GPUs), which OpenAI uses to develop the underlying software that drives AI applications such as ChatGPT. Instead of linking together hundreds of chips to build and run AI applications, Cerebras is betting that its much larger, foot-wide chip will outperform clusters of Nvidia chips.

Energy consumption has been a key concern in AI computation. Cerebras says its third-generation processor delivers higher performance while using the same amount of energy as its predecessor, at a time when power costs for developing, training, and running AI systems are rising.

Hot tags:

Semiconductors
