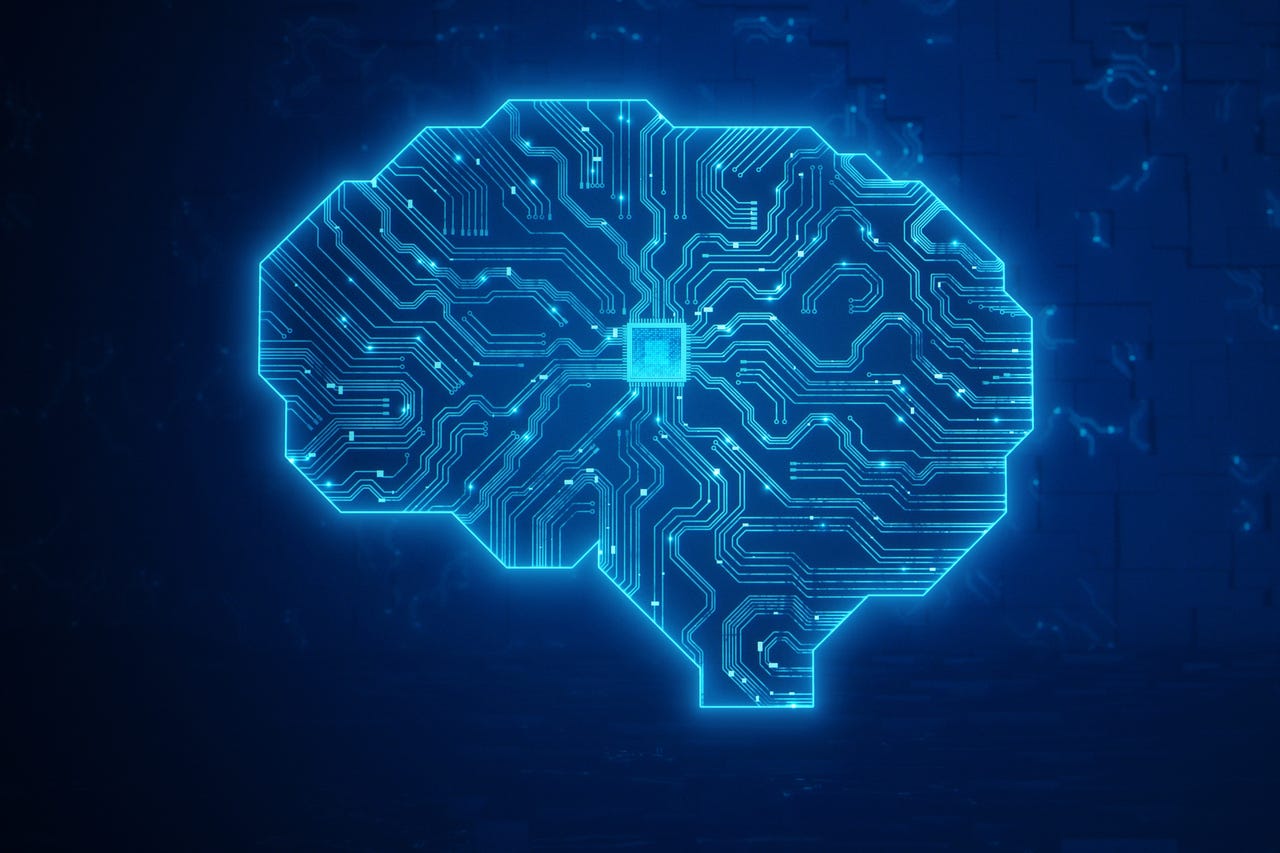

All signs seem to show that we have officially migrated from the era of the internet to that of AI. If the internet defined a new world of transformation in how we were going to consume, sell, entertain, and beyond, AI is now poised to establish yet another great leap in our collective human activity.

Experts say that AI's lightning-fast computations will bring unparalleled gains in productivity and innovation for you and me.

We will be able to spot cancerous tumors with lightning speed, unravel the genetic code behind diseases, design new drugs, figure out carbon-free sources of energy, eliminate drudgery, and who knows what else.

Except, there are a few not-so-minor catches.

The first is that Moore's Law -- postulated by Intel's legendary co-founder Gordon Moore, who said that chip density would roughly double every two years -- is going to hit a wall soon, if it hasn't already. (It is increasingly difficult to shrink chips further than we already have.)

"In the 15 years from 1986 to 2001, processor performance increased by an average of 52 percent per year, but by 2018, this had slowed to just 3.5 percent yearly -- a virtual standstill," says Klaus Æ. Mogensen in Farsight magazine.

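To put the two growth rates in the quote side by side, here is a minimal sketch of the compound-growth arithmetic. The function names are illustrative, and applying the 3.5 percent rate over a full 15-year span is an assumption made purely for comparison, not a figure from the quote.

```python
import math

def cumulative_gain(annual_rate: float, years: int) -> float:
    """Total performance multiple after compounding annual_rate for `years`."""
    return (1.0 + annual_rate) ** years

def doubling_time(annual_rate: float) -> float:
    """Years needed for performance to double at a steady annual_rate."""
    return math.log(2.0) / math.log(1.0 + annual_rate)

fast_era = cumulative_gain(0.52, 15)   # 1986 to 2001, rate taken from the quote
slow_era = cumulative_gain(0.035, 15)  # ASSUMPTION: same span at the 2018 rate

print(f"15 years at 52%/yr:  about {fast_era:,.0f}x")
print(f"15 years at 3.5%/yr: about {slow_era:.2f}x")
print(f"Doubling time at 52%/yr:  {doubling_time(0.52):.1f} years")
print(f"Doubling time at 3.5%/yr: {doubling_time(0.035):.1f} years")
```

Under these assumptions, the faster rate compounds to a gain of several hundredfold over 15 years, while the slower rate does not even double performance over the same span.
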
The second problem is more dire. Artificial neural networks (ANNs), which process vast real-world datasets (big data) on silicon chips, are spectacular guzzlers of power and water.

Training ANNs on current hardware produces colossal amounts of heat, which in turn demands equally massive amounts of cooling. That is simply not cool in an era of acute climate crisis.

According to recent estimates, within just three years AI models and their chips could soak up as much electricity as the entire Netherlands.

If this isn't bad enough, a technical challenge also looms: processing speeds will hit a wall because of the physical separation of data from the processors, a problem commonly known as the "von Neumann bottleneck."

In other words, what AI desperately needs is an epic AI hardware revolution. Fortuitously, a group of scientists have come up with just that.

It is an ingenious solution that utilizes not fabs or supercomputers but the most powerful organ known to all living species -- the human brain.

Fusing the human brain with machines -- dubbed bio-computing -- may sound outlandish, but it is becoming an increasingly popular antidote to the limitations of existing technology.

Consider the differences between the two: Large neural networks can draw millions of watts of power to function. By contrast, the human brain needs only about 20 watts.
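
Watts measure power (a rate), so a per-day comparison is clearer in kilowatt-hours. The sketch below does that conversion. The 20-watt figure comes from the article; the 1-megawatt draw is an assumed stand-in for "millions of watts," not a measured value for any real system.

```python
HOURS_PER_DAY = 24

def daily_energy_kwh(power_watts: float) -> float:
    """Energy in kilowatt-hours used in one day at a constant power draw."""
    return power_watts * HOURS_PER_DAY / 1000.0

BRAIN_WATTS = 20.0            # figure cited in the article
CLUSTER_WATTS = 1_000_000.0   # ASSUMPTION: 1 MW as a stand-in for "millions of watts"

brain_kwh = daily_energy_kwh(BRAIN_WATTS)
cluster_kwh = daily_energy_kwh(CLUSTER_WATTS)
ratio = CLUSTER_WATTS / BRAIN_WATTS

print(f"Brain:   {brain_kwh:.2f} kWh per day")
print(f"Cluster: {cluster_kwh:,.0f} kWh per day")
print(f"The cluster draws {ratio:,.0f} times the power of the brain")
```

Even at the low end of the assumed range, the gap is more than four orders of magnitude.
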

Energy efficiency is just one facet of the wonders of the human brain. There's another reason why artificial neural networks are designed to ape it.

The organ's otherworldly design is unmatchable in its intricate structure that allows it to do things that machines can only dream of (in simulations designed by the brain, of course).

The sheer networking capacity of the human brain is hard to fathom -- close to 100 billion neurons (each connected to some 10,000 others) and a quadrillion synapses (where neurons connect), all working non-stop by firing chemical or electrical signals at each other in call-and-response fashion.

This incredible ratio of computing horsepower to energy use is something that Feng Guo, an associate professor of intelligent systems engineering at the Indiana University Luddy School of Informatics, Computing and Engineering, wants to exploit. (The multitalented Guo has also received $3.8 million in federal grants towards the development of a groundbreaking wearable device to detect and treat an opioid overdose in real time.)

By architecting a hybrid computing system that fuses electronic hardware with human brain "organoids," Dr. Guo and others like him are racing to produce a whole new generation of computers that easily outstrip current machines in processing speed and energy efficiency.

This is the promise of the new field of biocomputing, going where no machine has gone before, especially in its ability to fuse with human organs in order to advance fields like medicine.

Dr. Guo and his colleagues grew a variety of brain cell cultures (early-stage and mature neurons and stem cells, among others), mounted them onto a multielectrode array, and then stimulated them electrically.

The brain is unique in that electrical charges allow it to change its neural connections and actually self-learn -- something called synaptic plasticity.

This is how humans are capable of using brain training techniques to wean themselves off bad habits, or convert negative thoughts into positive ones, thereby dramatically changing their mental makeup.

Keen to see what their primitive organoid could be capable of, Guo and his colleagues tested it by first converting 240 audio clips of eight people pronouncing Japanese vowels into electrical signals. These were translated by an AI decoder and then introduced to the organoid to make sense of.

The results were startling. The organoid successfully decoded the signals into the right category of vowels with a 78% accuracy rate -- lower than today's average AI neural network but one that used minuscule amounts of power and scant training.

"Through electrical stimulation training, we were able to distinguish an individual's vowels from a speaker pool," Guo said. "With the training, we triggered unsupervised learning of hybrid computing systems."

This was perhaps the most important discovery about the organoid -- a demonstrated capability of self-learning its way toward a higher accuracy rate. It was even able to solve complex, non-linear mathematical equations.
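
The article does not say which equations were involved. For a sense of what a non-linear system looks like, the sketch below iterates the Hénon map, a standard textbook example of a chaotic non-linear equation. The choice of this map, the parameters `a=1.4` and `b=0.3`, and the function names are all assumptions made for illustration, not details taken from the article.

```python
def henon_step(x, y, a=1.4, b=0.3):
    """Advance the Henon map by one iteration (illustrative parameters)."""
    return 1.0 - a * x * x + y, b * x

def henon_series(n, x0=0.0, y0=0.0):
    """Return the first n x-values of the map starting from (x0, y0)."""
    xs = []
    x, y = x0, y0
    for _ in range(n):
        x, y = henon_step(x, y)
        xs.append(x)
    return xs

# Two runs whose starting points differ by one part in a billion.
baseline = henon_series(100)
nudged = henon_series(100, x0=1e-9)

# In a chaotic system the tiny initial difference grows rapidly.
largest_gap = max(abs(p - q) for p, q in zip(baseline, nudged))
print(f"Largest gap between the two runs: {largest_gap:.3f}")
```

The squared term is what makes the system non-linear: a minuscule change in the starting point leads to a visibly different trajectory, which is why such series are hard to predict and make a demanding test for any learning system.
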

This then could become the lynchpin of future AI processing -- the ability of a bio-computer, outfitted with the smartest brains on earth, to process, learn, and remember things all in one space versus the separated processor and memory compartments in today's machines.

In one stroke, the organoid could eliminate heat, sidestep the limitations of Moore's Law, self-learn effortlessly, and bring an end to the memory-processor split of today's computing.

It's not so black and white, though, say experts in this field.

So, before we go off to start harvesting our own brain cells in-house in order to start building next-level machines, it's worth listening to concerns from scientists such as Lena Smirnova, an assistant professor of public health at Johns Hopkins University.

One major issue surrounds neuroethical concerns, considering there are live brain cells at work.

Smirnova -- along with her colleagues Brian Caffo and Erik C. Johnson -- also says that Guo's Brainoware system hasn't yet demonstrated the ability to store information over the long haul and use it to compute things. Doing so, while keeping brain cells healthy, is a major challenge in the field, she says.

In other words, it may be best to hold off on popping the champagne until those concerns are sorted out.

That said, the scientists did hail the experiment as groundbreaking, "likely to generate foundational insights into the mechanisms of learning, neural development and the cognitive implications of neurodegenerative diseases."
