Through a focus on more customized genAI models for enterprises and on industry-standard Ethernet, Intel sees a path to regaining its dominance in a new era of computing.

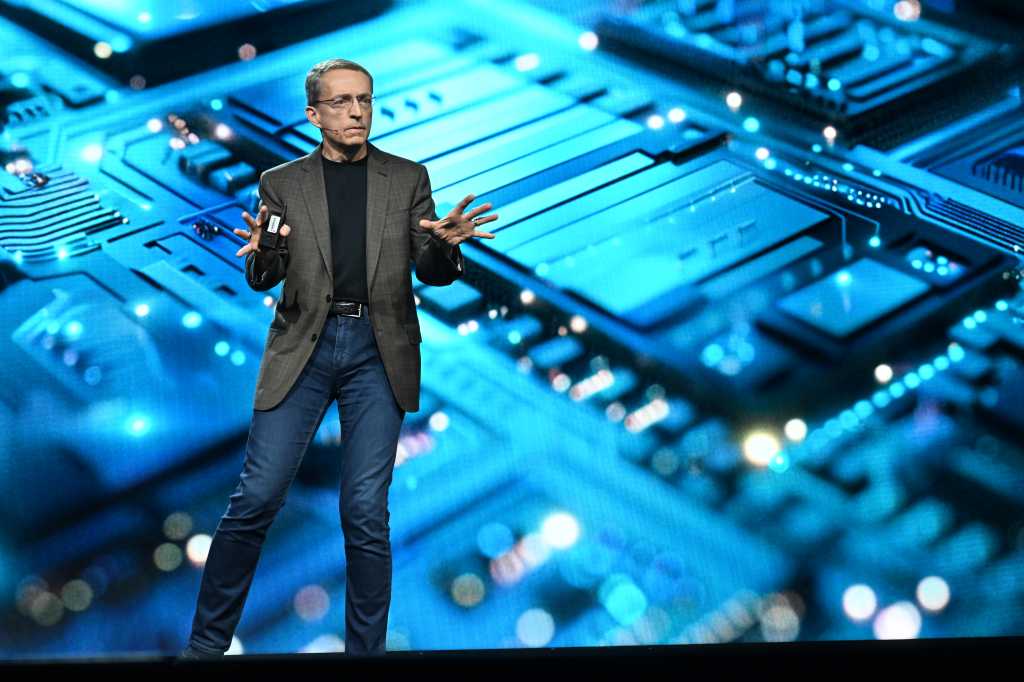

At its annual Intel Vision conference, CEO Pat Gelsinger laid out an ambitious roadmap that includes generative artificial intelligence (genAI) at every turn.

Intel's hardware strategy is centered on its new Gaudi 3 AI accelerator, which was purpose-built for training and running the massive large language models (LLMs) that underpin genAI in data centers. Intel is also taking aim with its new line of Xeon 6 processors, some of which will have onboard neural processing units (NPUs, or "AI accelerators") for use in workstations, PCs and edge devices. Intel also claims its Xeon 6 processors will be capable of running smaller, more customized LLMs, which are expected to grow in adoption.

Intel's pitch: Its chips will cost less and use a friendlier ecosystem than Nvidia's.

Gelsinger's keynote speech called out Nvidia's popular H100 GPU, saying the Gaudi 3 AI accelerator delivers on average 50% better inference performance and 40% better power efficiency "at a fraction of the cost." Intel also claims Gaudi 3 outperforms the H100 when training different types of LLMs, completing the job up to 50% faster.

The server and storage infrastructure needed for training extremely large LLMs will take up an increasing portion of the AI infrastructure market due to LLMs' insatiable hunger for compute and data, according to IDC Research. IDC projects that the worldwide AI hardware market (server and storage), including for running generative AI, will grow from $18.8 billion in 2021 to $41.8 billion in 2026, representing close to 20% of the total server and storage infrastructure market.
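IDC's projection implies a compound annual growth rate (CAGR) that the figures leave unstated. A minimal Python sketch of the arithmetic follows, assuming the forecast spans the five years from 2021 to 2026; the `cagr` helper is a hypothetical illustration, not IDC's methodology.

```python
# Implied compound annual growth rate (CAGR) behind the IDC projection,
# assuming a five-year span: $18.8 billion (2021) -> $41.8 billion (2026).

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0

rate = cagr(18.8, 41.8, 2026 - 2021)
print(f"Implied CAGR: {rate:.1%}")  # prints: Implied CAGR: 17.3%
```

In other words, the market would need to grow by roughly 17% a year to more than double over that period.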

Along with its rapidly growing use in data center servers, genAI is expected to push shipments of on-device AI chipsets for PCs and other mobile devices past 1.8 billion units by 2030, because laptops, smartphones and other form factors will increasingly ship with on-device AI capabilities, according to ABI Research. Put simply, Intel wants its Xeon chips (and NPUs) to power those desktop, mobile and edge devices. Intel's next-generation Core Ultra processor, Lunar Lake, is expected to launch later this year with more than 100 platform TOPS (trillions of operations per second) and more than 45 NPU TOPS, aimed at a new generation of genAI-enabled PCs.

While NPUs for machine-learning systems have been around for decades, the emergence of OpenAI's ChatGPT in November 2022 set off an arms race among chipmakers to supply the fastest and most capable accelerators to handle rapid genAI adoption.

Intel CEO Pat Gelsinger describes the company's "AI Everywhere" strategy at its Vision 2024 conference this week.

Nvidia started with a leg up on competitors. Its AI chips, graphics processing units (GPUs) originally designed for computer games, are their own form of accelerator, but they're costly compared with standard CPUs. Because its GPUs positioned Nvidia to take advantage of the genAI gold rush, it quickly became the third-most valuable company in the US; only Microsoft and Apple surpass it in market valuation.

Industry analysts agree that Intel's competitive plan is solid, but it has a steep hill to climb to catch Nvidia, a fabless chipmaker that boasts about 90% of the data center AI GPU market and 80% of the entire AI chip market.

Over time, more than half of Nvidia's data center business will come from AI services run in the cloud, according to Raj Joshi, senior vice president for Moody's Investors Service. "The lesson has not been lost on cloud providers such as Google and Amazon, each of which have their own GPUs to support AI-centric workloads," he said.

"Essentially, there's only one player that's providing Nvidia and AMD GPUs, and that's TSMC in Taiwan, which is the leading developer of semiconductors today, both in terms of its technology and its market share," Joshi said.

Intel is not fabless. It has long dominated the design and manufacture of high-performance CPUs, though recent challenges due to genAI reflect fundamental changes in the computing landscape.

Ironically, Intel's Gaudi 3 chip is manufactured by TSMC using its 5-nanometer (nm) process technology, versus the 7nm process used for the previous generation, Gaudi 2.

GenAI in data centers today, edge tomorrow

Data centers will continue to deploy CPUs in large numbers to support Internet services and cloud computing, but they are increasingly deploying GPUs to support AI - and Intel has struggled to design competitive GPUs, according to Benjamin Lee, a professor at the University of Pennsylvania's School of Engineering and Applied Science.

Intel's Gaudi 3 accelerator and Xeon 6 CPUs come at a lower cost and with lower power requirements than Nvidia's H100 and H200 GPUs, according to Forrester Research Senior Analyst Alvin Nguyen. A cheaper, more efficient chip will help mitigate the insatiable power demands of genAI tools while still being "performant," he said.

Accelerator chips serve two primary purposes for genAI: training and inference. For training, chips process vast amounts of data to train neural network algorithms that are then expected to make accurate predictions, such as the next word or phrase in a sentence, or the next image. For inference, chips must quickly work out the answer to a prompt (query) using the trained model.

But LLMs must be trained before they can infer a useful answer to a query. The most popular LLMs provide answers based on massive data sets ingested from the Internet, but those answers can sometimes be inaccurate or downright bizarre, as with genAI "hallucinations," when the technology goes off the rails.
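The split between training and inference can be illustrated with a deliberately tiny Python sketch. It swaps in a bigram word counter for a real neural network (an assumption made purely for illustration; LLMs are trained very differently and at vastly larger scale), and the `train` and `infer` helpers and the sample sentences are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

# Toy stand-in for an LLM: a bigram model that only counts which word follows which.
# Real LLMs train neural networks; only the train/infer split is the point here.

def train(corpus: list[str]) -> dict[str, Counter]:
    """Training phase: learn next-word statistics from example sentences."""
    model = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            model[current_word][next_word] += 1
    return model

def infer(model: dict[str, Counter], prompt: str) -> Optional[str]:
    """Inference phase: predict the most likely word to follow the prompt."""
    words = prompt.lower().split()
    if not words or words[-1] not in model:
        return None  # nothing was learned about this word during training
    return model[words[-1]].most_common(1)[0][0]

# Hypothetical training sentences, for illustration only.
corpus = [
    "intel makes chips for data centers",
    "nvidia makes chips for ai",
    "intel makes processors for pcs",
]
model = train(corpus)
print(infer(model, "Intel makes"))  # prints: chips
```

Prompted with "Intel makes," the sketch predicts "chips" because that pairing appeared most often in its training data; a word it never saw during training yields no prediction at all.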

Gartner Research Vice President Analyst Alan Priestley said that while today's GPUs primarily support the compute-intensive training of massive LLMs, in the future businesses will want smaller genAI LLMs based on proprietary datasets, not on information drawn from the ocean of data outside a company.

Nvidia's pricing for now is based on a high-performance product that does an excellent job of handling the intensive demands of training an LLM, Priestley said. Nvidia can charge what it wants for the product, but that also makes it relatively easy for rivals to undercut it in the market.

RAG to the rescue

To that end, Gelsinger called out Intel's Xeon 6 processors, which can run retrieval augmented generation, or "RAG" for short. RAG optimizes the output of an LLM by referencing (accessing) an external knowledge base beyond the massive online data sets on which genAI LLMs are traditionally trained. Using RAG software, an LLM could access a specific organization's databases or document sets in real time.

For example, a RAG-enabled LLM can provide a healthcare system's patients with medication advice, appointment scheduling, prescription refills and help finding physicians and hospital services. RAG can also be used to ingest customer records in support of more accurate and contextually appropriate responses from genAI-powered chatbots. And because RAG pulls from those external sources when a query arrives, answers can reflect the latest updates to them without retraining the model.
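To make the RAG flow concrete, here is a minimal Python sketch. It assumes a tiny hypothetical knowledge base and uses simple word-overlap scoring as a stand-in for the vector-embedding search that production RAG systems typically use. The document texts and the `retrieve` and `build_prompt` helpers are illustrative, not Intel's software, and the final call to an LLM is left out.

```python
import re

# Hypothetical in-house knowledge base, e.g., a health system's internal documents.
KNOWLEDGE_BASE = [
    "Prescription refill requests go through the patient portal and take two business days.",
    "Cardiology appointments are scheduled Monday through Thursday at the main campus.",
    "The ground-floor pharmacy is open every day until 8 p.m.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Retrieval step: rank documents by words shared with the query; keep the top k."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, documents: list[str]) -> str:
    """Augmentation step: prepend the retrieved context to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("How do I request a prescription refill?", KNOWLEDGE_BASE)
print(prompt)
# Generation step (omitted): a real system would send `prompt` to an LLM.
```

Because retrieval runs each time a question arrives, adding a document to `KNOWLEDGE_BASE` changes the context supplied with the very next answer, with no retraining; that is how RAG can keep answers current.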

The push for RAG and more narrowly tailored LLMs ties into Intel's confidential computing and Trust Domain Extensions (TDX) security efforts, which are aimed at enabling enterprises to use their data while also protecting it.

"And for those models, Intel's story is that you can run them on a much smaller system - a Xeon processor. Or you could run those models on a processor augmented by an NPU," Priestley said. "Either way, you know you can do it without investing in billions of dollars in huge arrays of hardware infrastructure."

"Gaudi 3, Granite Rapids or Sierra Forest Xeon processors can run large language models for the type of things that a business will need," Priestley said.

Intel is also betting on industry-standard Ethernet, pitting it against Nvidia's reliance on InfiniBand, a more proprietary high-performance computer networking interconnect.

Ethernet or InfiniBand?

During a media call this week, Das Kamhout, Intel's vice president of Xeon software, said he expects Gaudi 3 to be "highly competitive" because of its pricing, the company's open standards and its integrated on-chip network, which uses data-center-friendly Ethernet. Gaudi 3 has 24 Ethernet ports, which it uses to communicate with other Gaudi chips and between servers.

In contrast, Nvidia uses InfiniBand for networking and a proprietary software platform called Compute Unified Device Architecture (CUDA), whose programming model provides an API that lets developers tap GPU resources without specialized knowledge of GPU hardware. CUDA has become the industry standard for genAI accelerated computing, and it works only with Nvidia hardware.

Instead of a proprietary platform, Intel is building an open Ethernet networking model for genAI fabrics and introduced an array of AI-optimized Ethernet solutions at its Vision conference. The company is working through the Ultra Ethernet Consortium (UEC) to design large scale-up and scale-out AI fabrics.

"Increasingly, AI developers...want to get away from using CUDA, which makes the models a lot more transportable," Gartner's Priestley said.

A new chip arms race

Neither Intel nor Nvidia has been able to keep up with demand driven by a firestorm of genAI deployments. Nvidia's GPUs were already popular, which helped its share price surge by almost 450% since January 2023. And it continues to push ahead: at its GTC AI Conference last month, Nvidia unveiled the successor to its H100, the Blackwell B200, which delivers up to 20 petaflops of compute power.

Meanwhile, Intel at its Vision conference called out its sixth generation of Xeon processors, which includes Sierra Forest, the first "E-Core" Xeon 6 processor, which will be delivered to customers with 144 cores per socket, "demonstrating enhanced efficiency," according to IDC Research Vice President Peter Rutten. Intel says it has received positive feedback from cloud service providers that have tested the Sierra Forest chip.

Intel's newest Xeon 6 processors are targeted for use in data centers, the cloud and edge devices, but those chips will handle smaller to mid-sized LLMs.

Intel also plans to release the Granite Rapids processor in the second quarter of the year. "The product, which is being built on Intel 3nm process, shares the same base architecture as that of Sierra Forest, enabling easy portability in addition to the increased core and performance per watt and better memory speed," Rutten wrote in a report. Intel claims the Granite Rapids processor can run Llama 2 models with up to 70 billion parameters.

Intel's next-gen Xeon 6 and Core Ultra processors will be key to the company's ability to provide AI solutions across a range of use cases, including training, tuning, and inference, in a variety of locations (i.e., end user, edge, and data center), according to Forrester's Nguyen. But the Xeon and Core Ultra processors are being marketed for smaller to mid-sized large language models. Intel's new Gaudi 3 processor is purpose-built for genAI use and will be targeted at LLMs with 176 billion parameters or more, according to an Intel spokesperson.

"The continued AI [chip] supply chain shortages means Intel products will be in demand, guaranteeing work for both Intel products and Intel foundry," Nguyen said. "Intel's stated willingness to have other companies use their foundry services and share intellectual property - licensing technology they develop - means their reach may grow" into markets it does not currently address, such as mobile.
