So you've decided you are going to run 2vCPU VMs in one, or all (GHAST!), of your VDI pools.

Why did you decide this?

Because your application is multi-threaded and you need better response times?

Or simply because ((2 > 1) = TRUE) all the time?

What DO 2vCPU VMs really get you in your VDI environment?

Well, we wanted to know as well. But I'm going to twist this question a little bit to satisfy my blogging agenda: How can I get the most out of 2vCPU VMs in my VDI environment?

To answer these questions and more, we tested this combination in several variants and flavors, and are posting some conclusions in our blog series "VDI: The Missing Questions."

You are invited! If you've been enjoying our blog series, please join us for a free webinar discussing VDI: The Missing Questions, with Doron, Shawn and myself (Jason)!

A little history: I've seen this many times in my years doing Virtual Infrastructure consulting work: "Let's set the default template that we are going to deploy all of our workloads on to 2vCPUs." Or "Our desktops are multi-core, so every VM must have multiple CPUs!"

As we have shown in our series though, there is a cost to this blanket decision. An EXPENSIVE cost. But hold that thought for a second...there must be some upside somewhere with more vCPUs...and there is...check this graph out:

We tested on our Cisco UCS B200 M3 with two Intel Xeon E5-2665 CPUs and 256GB of RAM. The graph zooms in on the first 45 sessions per server so we can concentrate on latency and compare 2vCPU VMs to 1vCPU VMs running identical workloads. What do you see, besides the weird bump at 30 sessions in the 1vCPU chart (which we disregarded, as it didn't show up in further testing)?

What matters for this discussion is that we generally see better latency on the 2vCPU sessions than on the 1vCPU sessions.

HAHA! The 2vCPU fans cry out: "POINT MADE: 2 is greater than 1!!!" And they'd be right, that is, if they only want to run 45 sessions per server.

Ready to do that to your VDI environment?

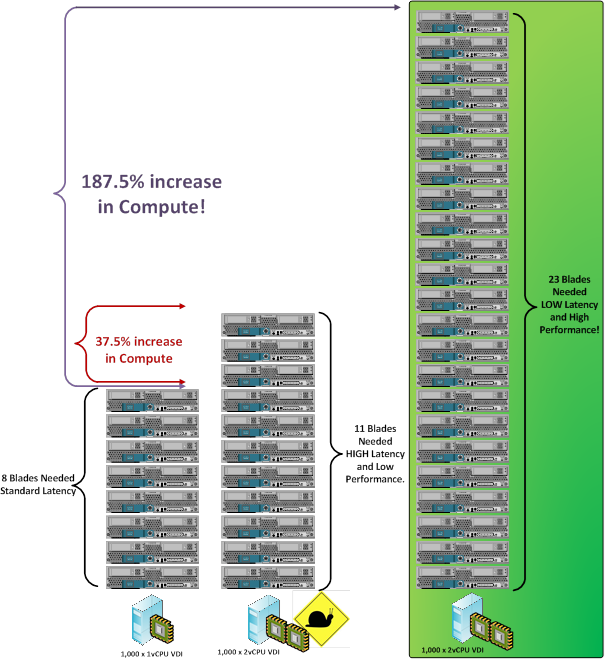

OK then, if you truly want the lower-latency advantage we just showed 2vCPU VMs can deliver, here is a visual depiction of what that means for your data center, using the 1000-user example environment that Shawn discussed:

From 8 servers needed for 1vCPU sessions, to 11 servers needed for 2vCPU sessions with similar latency, to 23 servers needed to truly realize the latency benefits of 2vCPUs for this workload, the server count increases drastically. From a sales perspective, sure, we and every other server manufacturer would love for every VDI shop to make this decision...heck, Mr. Customer, how can I convince you to run 4vCPU sessions? 4 > 2 > 1 = VERY TRUE!

WRONG.
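The arithmetic behind those server counts is simple enough to sketch. The Python below is a back-of-the-envelope illustration, not our sizing methodology: it assumes sessions are spread evenly across hosts with no N+1 spares or failover headroom, and the per-server densities it prints are back-calculated from the server counts above rather than measured. Capping each host at the 45 sessions per server from the latency graph is consistent with the 23-server figure.

```python
import math

USERS = 1000  # the 1000-user example environment

# Server counts quoted in the text for each configuration.
server_counts = {
    "1vCPU": 8,
    "2vCPU, similar latency": 11,
    "2vCPU, full latency benefit": 23,
}

def servers_needed(users: int, sessions_per_server: int) -> int:
    """Servers required to host `users` sessions at a given per-server density."""
    return math.ceil(users / sessions_per_server)

def implied_density(users: int, servers: int) -> float:
    """Average sessions per server implied by spreading `users` over `servers`."""
    return users / servers

for label, servers in server_counts.items():
    density = implied_density(USERS, servers)
    print(f"{label}: {servers} servers, ~{density:.1f} sessions/server")

# Capping density at the 45 sessions/server where 2vCPU latency wins:
print(servers_needed(USERS, 45))  # 23
```

Every session you shave off per-host density pushes the server count up by a ceiling division, which is why the jump from 8 to 23 servers is so steep.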

All joking aside, I am an engineer, and in a previous life I managed my own IT infrastructure, including the budget behind it. I want you to know that these impacts on your environment are very real. What this testing does show is that, yes, there is in fact a range of scaling in which 2vCPU VMs can provide latency benefits to your end users. So listen, and listen closely:

In order to truly get the benefits of this better responsiveness with 2vCPU VMs, you absolutely positively MUST limit the number of sessions on your server.

The graph shows this, but follow my logic:

That, my friends, results in poor latency and an unhappy end user.

Therefore, to get the most out of your 2vCPU VMs and truly decrease end-user latency with them, you must ensure that the VMs can get access to those physical CPU cores. This can only be achieved by limiting the session count per server. Beyond 45 sessions per server in this workload simulation, not only do 1vCPU VMs outperform their 2vCPU counterparts, but we can also fit significantly more of them on the same system.
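One rough way to see why session count matters is to compare allocated vCPUs to physical cores. The sketch below assumes the test host's two Intel Xeon E5-2665 sockets at 8 physical cores each (16 cores, ignoring Hyper-Threading), and checks 45 sessions alongside the roughly 91 and 125 sessions per server implied by the 11- and 8-server figures in the 1000-user example. The target ratio passed to `max_sessions` is a hypothetical placeholder, not a recommendation from our testing, and a simple ratio does not capture hypervisor scheduling effects such as CPU ready time.

```python
# Test host: two Intel Xeon E5-2665 sockets, 8 physical cores each.
# Hyper-Threading threads are deliberately not counted here.
SOCKETS = 2
CORES_PER_SOCKET = 8
PHYSICAL_CORES = SOCKETS * CORES_PER_SOCKET  # 16

def vcpus_per_core(sessions: int, vcpus_per_vm: int, cores: int = PHYSICAL_CORES) -> float:
    """Ratio of allocated vCPUs to physical cores on one host."""
    return sessions * vcpus_per_vm / cores

def max_sessions(target_ratio: float, vcpus_per_vm: int, cores: int = PHYSICAL_CORES) -> int:
    """Largest session count that stays at or under a target vCPU:core ratio."""
    return int(target_ratio * cores // vcpus_per_vm)

# Session densities: 45 from the latency graph; ~91 and 125 implied by
# spreading 1000 users over 11 and 8 servers respectively.
for sessions in (45, 91, 125):
    one = vcpus_per_core(sessions, 1)
    two = vcpus_per_core(sessions, 2)
    print(f"{sessions} sessions: 1vCPU -> {one:.2f}, 2vCPU -> {two:.2f} vCPUs per core")

# Hypothetical cap of 4 vCPUs per physical core, for illustration only.
print(max_sessions(4.0, 1), max_sessions(4.0, 2))
```

At any given session count, 2vCPU VMs put twice as many vCPUs in line for the same 16 cores, so holding the ratio down means halving the sessions per host.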

Next week: Doron discusses how memory speed affects VDI scalability.
